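Scraping Zhaopin (智联招聘) job listings with Python 3.7. If you watch the requests that the Zhaopin search page makes, you can see that the listings come from a JSON API, so it is enough to fill in the query parameters and request that endpoint directly. Switching the User-Agent header between requests and sleeping briefly while scraping has been enough to get past the site's anti-scraping checks. If a package is missing when you run the script, install it with pip. The script below also imports pymysql, fake_useragent, and requests_html, which install the same way:

pip install requests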
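
The request URL can also be assembled from a parameter dict instead of a hand-encoded string. The following is a minimal sketch, not the author's code: the endpoint and the parameter names (`start`, `pageSize`, `cityId`, `kw`, `kt`) are copied from the URL used in jobs.py, while `build_search_url` is a hypothetical helper name. Whether the API accepts a request with only these parameters is an assumption, since the original URL passes several more filters.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

# Endpoint copied from the URL used in jobs.py.
API_BASE = "https://fe-api.zhaopin.com/c/i/sou"


def build_search_url(keyword, city_code, page, page_size=90):
    """Return the search URL for one result page (hypothetical helper)."""
    params = {
        "start": page * page_size,  # offset of the first result on this page
        "pageSize": page_size,
        "cityId": city_code,
        "kw": keyword,              # urlencode percent-encodes this as UTF-8
        "kt": 3,
    }
    return API_BASE + "?" + urlencode(params)


url = build_search_url("研发", "530", page=1)
query = parse_qs(urlsplit(url).query)
print(url)
print(query["kw"], query["start"])
```

Letting `urlencode` do the escaping means the keyword can stay readable in the source, rather than being stored as a pre-encoded string.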

SQL schema

CREATE TABLE `jobs` (
  `id` int(11) NOT NULL AUTO_INCREMENT COMMENT 'id',
  `keyword` varchar(255) DEFAULT NULL COMMENT 'keyword',
  `city` varchar(255) DEFAULT NULL COMMENT 'city',
  `company` varchar(255) DEFAULT NULL COMMENT 'company name',
  `size` varchar(20) DEFAULT NULL COMMENT 'company size',
  `type` varchar(10) DEFAULT NULL COMMENT 'company type',
  `company_url` varchar(255) DEFAULT NULL COMMENT 'company URL',
  `eduLevel` varchar(20) DEFAULT NULL COMMENT 'education level',
  `emplType` varchar(20) DEFAULT NULL COMMENT 'employment type',
  `jobName` varchar(50) DEFAULT NULL COMMENT 'job title',
  `jobTag` varchar(200) DEFAULT NULL COMMENT 'benefits',
  `jobType` varchar(200) DEFAULT NULL COMMENT 'job category',
  `position` text COMMENT 'job description',
  `positionURL` varchar(200) DEFAULT NULL COMMENT 'job posting URL',
  `rate` varchar(10) DEFAULT NULL COMMENT 'response rate',
  `salary` varchar(20) DEFAULT NULL COMMENT 'salary',
  `workingExp` varchar(10) DEFAULT NULL COMMENT 'work experience',
  `city_code` varchar(10) DEFAULT NULL COMMENT 'city code',
  `create_time` datetime DEFAULT CURRENT_TIMESTAMP COMMENT 'created at',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=4321 DEFAULT CHARSET=utf8mb4;

jobs.py

# -*- coding: utf-8 -*-
import time
import urllib.parse

import pymysql
import requests
from fake_useragent import UserAgent
from requests_html import HTMLSession

def parse_page(keyword, url, city_code):
    """Fetch one page of search results and store each posting in MySQL."""
    try:
        ua = UserAgent(verify_ssl=False)  # verify_ssl=False avoids an SSL error
        headers = {'User-Agent': ua.random}
        print(headers)
        response = requests.get(url, headers=headers).json()
        result = response['data']['results']
        keyword = urllib.parse.unquote(keyword)  # store the readable keyword
        session = HTMLSession()  # one session reused for all detail pages
        for r in result:
            city = r['city']['display']
            company = r['company']['name']
            size = r['company']['size']['name']
            company_type = r['company']['type']['name']  # avoids shadowing built-in type()
            company_url = r['company']['url']
            eduLevel = r['eduLevel']['name']
            emplType = r['emplType']
            jobName = r['jobName']
            jobTag = r['jobTag']['searchTag']
            jobType = r['jobType']['display']
            positionURL = r['positionURL']  # job posting URL
            rate = r['rate']  # response rate
            salary = r['salary']  # salary
            workingExp = r['workingExp']['name']  # work experience
            # Fetch the job description from the posting page
            detail = session.get(positionURL)
            pos = detail.html.find('.pos-ul', first=True)
            position = pos.text if pos is not None else ''
            params = (keyword, city, company, size, company_type, company_url,
                      eduLevel, emplType, jobName, jobTag, jobType, position,
                      positionURL, rate, salary, workingExp, city_code)
            # Parameterized query: the driver escapes quotes in the scraped text
            insert_sql = (
                "insert into jobs(keyword,city,company,size,type,company_url,"
                "eduLevel,emplType,jobName,jobTag,jobType,position,positionURL,"
                "rate,salary,workingExp,city_code) "
                "values (" + ",".join(["%s"] * len(params)) + ")"
            )
            try:
                cur.execute(insert_sql, params)
                conn.commit()
            except Exception as e:
                print(e)
    except Exception as e:
        print(e)

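def parse_main(url, pages, city_code, job):
    """Scrape `pages` result pages for one city."""
    for page in range(pages):
        p = page * 90  # start offset; the URL requests pageSize=90
        url_r = url.format(page=p, city_code=city_code, job=job)
        parse_page(job, url_r, city_code)
        time.sleep(5)  # pause between pages to avoid being blocked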

if __name__ == '__main__':
    conn = pymysql.connect(
        host="127.0.0.1",
        user="root",
        password="123456",
        database="business",
        port=3306,
        charset="utf8mb4"  # matches the table's utf8mb4 charset
    )
    cur = conn.cursor()
    url = "https://fe-api.zhaopin.com/c/i/sou?start={page}&pageSize=90&cityId={city_code}&industry=10100&salary=0,0&workExperience=-1&education=-1&companyType=-1&employmentType=-1&jobWelfareTag=-1&kw={job}&kt=3&=0&_v=0.33977872&x-zp-page-request-id=b0434b03d11e4b9daf4cf3a887fbd121-1547573058264-851670"
    pages = 3
    job = '%E7%A0%94%E5%8F%91'  # URL-encoded "研发" (R&D)
    city_code_list = ['530', '765', '538', '763']
    # City codes: Beijing 530; nationwide 489; Shenzhen 765; Shanghai 538; Guangzhou 763
    for city_code in city_code_list:
        parse_main(url, pages, city_code, job)
    conn.close()
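
The INSERT in jobs.py has to keep 17 column names, 17 placeholders, and 17 values in the same order. One way to keep them aligned is to derive all three from a single tuple of column names. This is only a sketch: `COLUMNS`, `INSERT_SQL`, and `record_to_row` are hypothetical names, the record layout is assumed to be the one jobs.py reads from the API, and the sample record is made up for illustration.

```python
# Column order shared by the INSERT statement and the row tuple.
COLUMNS = (
    "keyword", "city", "company", "size", "type", "company_url",
    "eduLevel", "emplType", "jobName", "jobTag", "jobType", "position",
    "positionURL", "rate", "salary", "workingExp", "city_code",
)

# One %s placeholder per column, in the style PyMySQL expects.
INSERT_SQL = "insert into jobs({}) values ({})".format(
    ",".join(COLUMNS), ",".join(["%s"] * len(COLUMNS))
)


def record_to_row(r, keyword, position, city_code):
    """Flatten one API result dict into a tuple ordered like COLUMNS."""
    values = {
        "keyword": keyword,
        "city": r["city"]["display"],
        "company": r["company"]["name"],
        "size": r["company"]["size"]["name"],
        "type": r["company"]["type"]["name"],
        "company_url": r["company"]["url"],
        "eduLevel": r["eduLevel"]["name"],
        "emplType": r["emplType"],
        "jobName": r["jobName"],
        "jobTag": r["jobTag"]["searchTag"],
        "jobType": r["jobType"]["display"],
        "position": position,
        "positionURL": r["positionURL"],
        "rate": r["rate"],
        "salary": r["salary"],
        "workingExp": r["workingExp"]["name"],
        "city_code": city_code,
    }
    return tuple(values[c] for c in COLUMNS)


# Made-up record, only to show the shape the function expects.
sample = {
    "city": {"display": "Beijing"},
    "company": {"name": "Example Co.", "size": {"name": "100-499"},
                "type": {"name": "private"}, "url": "https://example.com/c"},
    "eduLevel": {"name": "bachelor"},
    "emplType": "full-time",
    "jobName": "Python developer",
    "jobTag": {"searchTag": "insurance"},
    "jobType": {"display": "software"},
    "positionURL": "https://example.com/job/1",
    "rate": "80%",
    "salary": "10K-15K",
    "workingExp": {"name": "1-3 years"},
}
row = record_to_row(sample, "研发", "It's a job description", "530")
print(INSERT_SQL)
print(len(row))
```

With a live connection the row would be stored with `cur.execute(INSERT_SQL, row)`, leaving the quoting of the scraped text to the driver.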

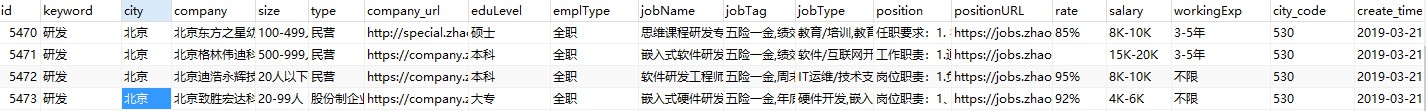

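First create the database (mine is named business locally) and the jobs table from the SQL above, then run py jobs.py from the command line to start scraping. In my test the scrape ran normally, and the screenshot above shows the result.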
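
The header switching and the sleep mentioned at the start can be wrapped in one helper, so that every request goes through both. This is a rough sketch under stated assumptions, not the author's implementation: `polite_get` and `USER_AGENTS` are hypothetical names, the User-Agent strings are illustrative, and the delay range is arbitrary. The fetch function is passed in so that the example runs without touching the network.

```python
import random
import time

# Illustrative User-Agent strings; any realistic pool works.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/12.0.2 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:64.0) Gecko/20100101 Firefox/64.0",
]


def polite_get(fetch, url, min_delay=1.0, max_delay=3.0, sleep=time.sleep):
    """Call fetch(url, headers=...) with a random User-Agent, then pause."""
    headers = {"User-Agent": random.choice(USER_AGENTS)}
    response = fetch(url, headers=headers)
    # A random pause makes the request rhythm less regular.
    sleep(random.uniform(min_delay, max_delay))
    return response


# Stand-in for requests.get so the example runs offline.
def fake_fetch(url, headers):
    return {"url": url, "ua": headers["User-Agent"]}


pauses = []  # records the delays instead of actually sleeping
result = polite_get(fake_fetch, "https://example.com/", sleep=pauses.append)
print(result["ua"])
print(pauses)
```

In the script itself the call would look like `polite_get(requests.get, url).json()`, since `requests.get` accepts the same `headers` keyword.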

Reference link: GitHub repository